Late binding is a programming term that I’ve commandeered for Crowbar’s DevOps design objectives.

We believe that late binding is a best practice for CloudOps.

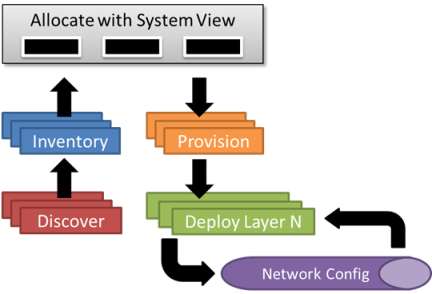

Understanding this concept is turning out to be an important but confusing differentiation for Crowbar. We’ve effectively inverted the typical deploy pattern of building up a cloud from bare metal; instead, Crowbar allows you to build a cloud from the top down. The difference is critical – we delay hardware decisions until we have the information needed to do the correct configuration.

If late binding still sounds confusing, the concept is really very simple: “we hold off all work until you’ve decided how you want to set up your cloud.”

Late binding arose from our design objectives. We started the project with a few critical operational design objectives:

- Treat the nodes and application layers as an interconnected system

- Realize that application choices should drive down the entire application stack including BIOS, RAID and networking

- Expect the entire system to be constantly changing, so we must track state and avoid locked configurations.

We’d seen these objectives as core tenets in hyperscale operators who considered bare metal and network configuration to be an integral part of their application deployment. We know it is possible to build the system in layers that only (re)deploy once the application configuration is defined.
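To make the idea concrete, here is a minimal sketch of late binding in plain Python. It is not Crowbar code: the `Node` class, the `HARDWARE_PROFILES` table, the role names, and the RAID/BIOS/network values are all hypothetical, made up for illustration. The point it tries to show is that nothing hardware-specific gets applied until an application role has been chosen, and that changing the role later simply triggers a redeploy.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical mapping from application role to hardware settings.
# The role names and values are illustrative assumptions, not Crowbar data.
HARDWARE_PROFILES = {
    "hadoop-datanode": {"raid": "JBOD", "bios": "storage", "network": "10g-bonded"},
    "openstack-compute": {"raid": "RAID10", "bios": "virtualization", "network": "vlan-trunk"},
}


@dataclass
class Node:
    """A discovered machine whose hardware configuration is bound late."""
    name: str
    role: Optional[str] = None                    # unknown until the operator decides
    applied: dict = field(default_factory=dict)   # settings currently on the node

    def assign_role(self, role: str) -> None:
        # The application choice is recorded first; no hardware is touched yet.
        if role not in HARDWARE_PROFILES:
            raise ValueError(f"unknown role: {role}")
        self.role = role

    def deploy(self) -> dict:
        # Late binding: RAID, BIOS and networking are derived from the role
        # at deploy time instead of being pre-staged.
        if self.role is None:
            raise RuntimeError(f"{self.name}: no role chosen, nothing to configure")
        self.applied = dict(HARDWARE_PROFILES[self.role])
        return self.applied


node = Node("node-01")             # discovered, but still unconfigured
node.assign_role("hadoop-datanode")
node.deploy()                      # hardware settings now follow the role
node.assign_role("openstack-compute")
node.deploy()                      # the same node is rebound after a role change
```

In this sketch, calling `deploy()` before a role is assigned raises an error rather than guessing, which is the "hold off all work" behavior in miniature.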

We have all this great interconnected automation! Why waste it by having to pre-stage the hardware or networking?

In cloud, late binding is known as “elastic computing” because you wait until you need resources to deploy. But running apps on cloud virtual machines is simple when compared to operating a physical infrastructure. In physical operations, RAID, BIOS and networking matter a lot because there are important and substantial variations between nodes. These differences are what drive late binding as one of Crowbar’s core design principles.

“Late Binding” as a term has a history of pejorative use. Here, we’re gaining so much from configuring last what was normally configured first.

I often trip over this. I know I want a Hadoop system, so I start buying lots of hardware and drives. But it’s so hard to size the purchase correctly when I don’t have a real sense of my usage stats, so I end up specifying at least some of the wrong gear. I need to focus on the fact that I can deploy Hadoop with Crowbar onto general-purpose hardware, and then watch and measure its usage and performance. When I really know what hardware I need, based on actual stats collected from my live systems, I can order it. Crowbar will make it easy for me to expand my cluster to make use of, and even prioritize, the new gear. That’s some VERY “late binding.”

Keep at it!

Reblogged this on More Mind Spew-age from Harold Spencer Jr. and commented:

Late binding is an interesting programming term. It really sheds light as to how much DevOps has been continually evolving.
