Joining us this week is Ian Rae, CEO and founder of CloudOps, who recorded the podcast during the Google Next conference in 2018.

Highlights

- 1 min 55 sec: Defining cloud from a CloudOps perspective

- A business model and an operations model

- 3 min 59 sec: Update from Google Next 2018 event

- Google is the “Engineer’s Cloud”

- Google’s approach vs. Amazon’s approach to feature design and release

- 9 min 55 sec: Early Amazon: no easy button

- Amazon educated the market as the industry leader

- 12 min 04 sec: What is the state of hybrid cloud? Do we need it?

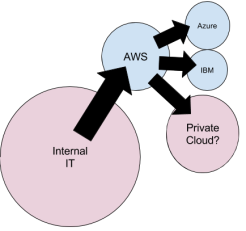

- The complexity of systems leads organizations to use private and public clouds, as well as multiple cloud providers

- Open source enables workloads to run on various clouds, even when a given cloud was not designed to support that type of workload

- Google’s strategy centers on open source in the cloud

- 14 min 12 sec: IBM’s visibility in the open source and cloud markets

- Didn’t build true cloud services (e.g., you still had to open a ticket to remap a VLAN)

- 16 min 40 sec: OpenStack tried to compete on service components

- Couldn’t compete without product managers to guide developers

- Missed the last mile between the technology and the customer

- Didn’t want to take on the customer’s operational burden

- 19 min 31 sec: Is innovation driven by listening to customers, or by developers doing what they think is best?

- OpenStack is seen as legacy as customers look for cloud-native infrastructure

- The significance of install time: OpenStack vs. Kubernetes

- 22 min 44 sec: Google’s announcement of GKE for on-premises infrastructure

- Not really on-premises; more like Platform9 for OpenStack

- GKE solves both the end-user experience and the operational challenges of delivering it

- 26 min 07 sec: Edge IT replaces today’s on-premises IT

- Bullish on the future of edge computing

- 27 min 27 sec: Who delivers the control plane for the edge?

- Recommends open source for the control plane

- 28 min 29 sec: Current tech hides the infrastructure problems

- Someone still has to deal with the physical hardware

- 30 min 53 sec: The commercial driver for rapid edge adoption

- 32 min 20 sec: CloudOps building software: the next generation of BSS/OSS (business and operations support systems) for telcos

- Meeting cloud providers’ need for flexibility in creating services, including the ability to switch the backend service provider

- Amazon is the new Win32

- 38 min 07 sec: Can customers install their own software? Will people buy software anymore?

- Comparing the payment models of Salesforce and Slack

- Google lets customers either run its technology themselves or have Google manage it for them

- 40 min 43 sec: Wrap-Up

Podcast Guest: Ian Rae, CEO and Founder, CloudOps

Ian Rae is the founder and CEO of CloudOps, a cloud computing consulting firm that provides multi-cloud solutions for software companies, enterprises and telecommunications providers. Ian is also the founder of cloud.ca, a Canadian infrastructure-as-a-service (IaaS) cloud focused on data residency, privacy and security requirements. He is a partner at Year One Labs, a lean startup incubator, and is the founder of the Centre cloud.ca in Montreal. Before turning to cloud, Ian was responsible for engineering at Coradiant, a leader in application performance management.

Here’s the summary: