One of IBM’s major announcements at Think 2018 was Managed Kubernetes on Bare Metal. This new offering combines elements of their existing offerings to provide additional security, attestation and performance isolation. Bare metal has been a hot topic for cloud service providers recently, with AWS adding it to their platform and Oracle using it as their primary IaaS. With these offerings as a backdrop, let’s explore the role of bare metal in the 2020 Data Center (DC2020).

Physical servers (aka bare metal) are the core building block for any data center; however, they are often abstracted out of sight by a virtualization layer such as VMware, KVM, Hyper-V or many others. These platforms are useful for many reasons. In this post, we’re focused on the fact that they provide a control API for infrastructure that makes it possible to manage compute, storage and network requests. Yet the abstraction comes at a price in cost, complexity and performance.

The historical lack of good API control has made bare metal less attractive, but that is changing quickly due to two forces.

These two forces are Container Platforms and Bare Metal as a Service or BMaaS (disclosure: RackN offers a private BMaaS platform called Digital Rebar). Container Platforms such as Kubernetes provide an application service abstraction level for data center consumers that eliminates the need for users to worry about traditional infrastructure concerns. That means that most users no longer rely on APIs for compute, network or storage, and instead allow the platform to handle those issues. On the other side, BMaaS provides VM-style, infrastructure-level APIs for the actual physical layer of the data center, so users who do care about compute, network or storage can work without VMs.

The combination of containers and bare metal APIs has the potential to squeeze virtualization into a limited role.

The IBM bare metal Kubernetes announcement illustrates both of these forces working together. Users of the managed Kubernetes service are working through the container abstraction interface and really don’t worry about the infrastructure; however, IBM is able to leverage their internal bare metal APIs to offer enhanced features to those users without changing the service offering. These benefits include security (IBM White Paper on Security), isolation, performance and (eventually) access to metal features like GPUs. While the IBM offering still includes VMs as an option, it is easy to anticipate that becoming less attractive for all but smaller clusters.

The impact for DC2020 is that operators need to rethink how they rely on virtualization as a ubiquitous abstraction. As more applications rely on container service abstractions, the platforms will grow in size and virtualization will provide less value. With the advent of better control of the bare metal infrastructure, operators have real options to get deep control without adding virtualization as a requirement.

Shifting to new platforms creates opportunities to streamline operations in DC2020.

Even with virtualization and containers, having better control of the bare metal is a critical addition to data center operations. The ideal data center has automation and control APIs for every possible component from the metal up.

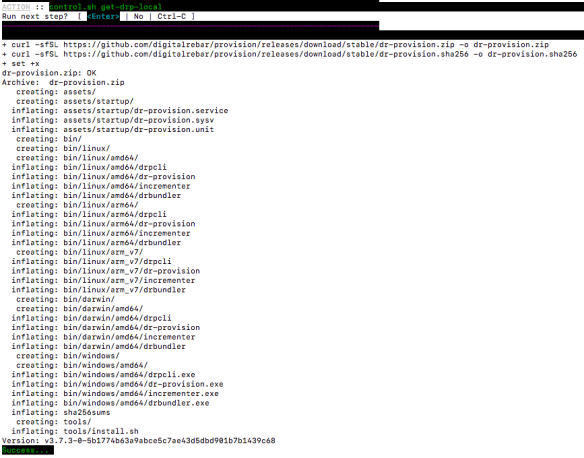
