Last week Dave McCrory (@McCrory) and I (@Zehicle) had the benefit of intensive Azure training at Microsoft HQ to support Dell’s Azure Stamp.

We’ve assembled a top 20 list of things to know about programming for Azure (and really any PaaS leaning cloud):

- If you want performance, optimize to reduce fees. Azure (like any cloud) is architected to penalize you if you use its resources poorly. The challenge is to fix this before your boss gets the tab for your unenlightened design decisions.

- Coding .NET on Azure is easy; architecting for Azure requires learning. Clouds put things in different places than you are used to and the rules are different. Expect a learning curve.

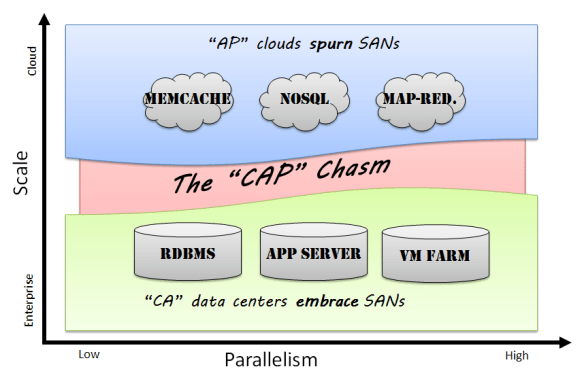

- Partitioning = parallelism. Learn to love partitions in all their forms, because your app will be throttled if you throw everything into a single partition! On the upside, each partition operates in parallel and even better, they usually don’t cost extra (SQL is the exception).

- Roles are flexible. You can run web servers (Apache, etc.) on a worker role and worker tasks on a web role. This is a good way to save some change since you pay per role instance. It runs counter to separation of concerns, but financially you should also combine workers into a single role where possible.

- Understand walking deployments. You can (and should) have simultaneous versions of the code operating against the same data so that you can roll upgrades (à la Timothy Fitz/Eric Ries) to reduce risk without reducing performance. You should expect your data schema to simultaneously span multiple code versions.

- Learn about Update Domains (UDs). Update domains allow rolling upgrades and changes to applications and services. They are part of how you partition your overall application. If you’re planning a VIP swap deployment, then you won’t care.

- Each service = ONE external IP. You can have many VMs backing each service (and multiple roles in a service) and Azure will load balance between them so you can scale out each service. Think of each service as a clonable entity: there will be at least 1 and more can be added if you want to scale.

- Understand the difference between VIP and DIP. VIP stands for Virtual IP; VIPs are external, public, and metered. DIPs are internal, private, and load balanced. Azure provides an API to discover your DIPs – do not assume you know them because they are DYNAMIC IPs. Azure won’t let you see other DIPs inside the system.

- Azure has rich diagnostics, but beware. Azure leverages the existing diagnostics built into their system, but has to get the data off box since instances are volatile. This means that problems can be hard to isolate, while excessive logging can impact performance and generate fees. Microsoft lets you target individual systems for elevated logging levels. You can also Terminal Server into a VM for troubleshooting (with caution).

- The new Azure admin console rocks. Take your pick between Silverlight or MMC Snap-in.

- Everything goes into Azure Storage. Learn to love it. Queues -> storage. Tables -> storage. Blobs -> storage. Logging -> storage. Code Repo -> storage. vDisk -> storage. SQL -> SQL (they march to their own drummer).

- Queues are essential, but tricky. Learn the meaning of idempotent because using queues requires you to handle failures and timeouts. The scary part is that it will work nicely until you exceed some limits and then you’ll experience cascading failure. Whee! Oh yeah, and queues require polling (which stinks as a notification model).

- SQL Azure is mostly like MS SQL. Microsoft did a smart thing in keeping Cloud SQL highly compatible with Local SQL. The biggest caveat is that each partition is limited in size. If you embrace the size limits you will get better performance. So stop pushing BLOBs into databases and start sharding.

- Duplicating data in tables will improve performance. This has to do with how partitions and keys operate but is an interesting architecture for NoSQL – stage data for use. Don’t be afraid to stage the same data in multiple ways. It may be faster/cheaper to write data twice if it becomes easier to find when you search it 1000s of times.

- Table data can be “warmed up.” Storage has logic that makes frequently accessed items faster (sort of like a cache ;). If you can anticipate load spikes then you should warm the data just before the spike.

- Storage is billed on both amount stored and number of transactions. You can get burned on a small but busy set of data. Note: you will pay even if you 404 a request.

- Azure has a CDN. Leveraging Microsoft’s Content Delivery Network (CDN) will improve performance for your users with small, low latency, high request items. You need to change your URLs for those assets. Best practice is to use some versioning in the URI so that you can force changes. Remember, CDN is SLOWER for the first hit when the data is not in cache so avoid CDN for low volume assets!

- Provisioning time is not instant. Azure needs anywhere from 1-3 minutes to spin up a new instance of a role. Build this lag into your architecture and dynamic scale plans. New databases and partitions are fast.

- The VM Role is maintained by YOU. Using the VM role is a handy shortcut, but has a long list of gotchas. Some of note: 1) the VM can be “reset” to the last VM image state that you uploaded, 2) you are responsible for VM OS upgrades and patches, 3) VMs must be clonable because they will operate in parallel.

- Azure supports more than .NET. You can set up anything in a worker (and now VM) role, but there are nuances to doing this effectively. You really need to understand how Azure works and had better be ready to crack open Visual Studio for some things even if you’re writing in Java.
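To make the partitioning item in the list above a bit more concrete, here is a minimal sketch of one way to spread entities across partitions: derive a stable bucket from each entity's ID so that load does not pile into a single partition. This is plain Python, not an Azure API; the function name `partition_key`, the bucket count of 16, and the `pNN` key format are all our own illustrative assumptions.

```python
import hashlib


def partition_key(entity_id: str, partitions: int = 16) -> str:
    """Map an entity ID to one of `partitions` stable buckets.

    Hypothetical helper for illustration only: the same ID always lands
    in the same bucket, and different IDs spread across all buckets.
    """
    # Use a cryptographic hash so the result is stable across processes
    # (Python's built-in hash() is randomized per run for strings).
    digest = hashlib.sha256(entity_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % partitions
    return f"p{bucket:02d}"


if __name__ == "__main__":
    for user in ("user-1", "user-2", "user-3"):
        print(user, "->", partition_key(user))
```

The trade-off is that queries spanning many entities now have to touch several partitions, so pick the key based on how the data is actually read.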
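For the queue item in the list above, this is a rough sketch of what "idempotent" means in practice: if a message is delivered more than once (for example after a timeout), handling it again must not change the result. The queue here is just an in-memory list and the `Message`, `Ledger`, and `drain` names are hypothetical; a real worker would poll Azure queue storage and persist the processed IDs somewhere durable.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    message_id: str  # unique ID assigned by the producer (assumption)
    account: str
    amount: int


@dataclass
class Ledger:
    balances: dict = field(default_factory=dict)
    processed_ids: set = field(default_factory=set)

    def apply(self, msg: Message) -> bool:
        """Apply a deposit once; return False if it was already applied."""
        if msg.message_id in self.processed_ids:
            return False  # duplicate delivery: safely ignore
        self.balances[msg.account] = self.balances.get(msg.account, 0) + msg.amount
        self.processed_ids.add(msg.message_id)
        return True


def drain(queue, ledger: Ledger) -> int:
    """Process every message in the queue; return how many had an effect."""
    return sum(1 for msg in queue if ledger.apply(msg))


if __name__ == "__main__":
    ledger = Ledger()
    # "m1" shows up twice, as it might after a visibility timeout.
    queue = [Message("m1", "alice", 10), Message("m1", "alice", 10), Message("m2", "alice", 5)]
    print(drain(queue, ledger), ledger.balances)
```

Tracking processed IDs is only one approach; designing the operation itself so that repeating it is harmless (for example "set balance to X" rather than "add X") is often simpler.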
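For the storage billing item in the list above, a back-of-the-envelope calculation shows how a small but busy data set can cost more in transactions than in capacity. The rates below are made-up placeholders, not Azure's actual prices, and the function name `storage_cost` is our own; plug in the numbers from your current price sheet.

```python
def storage_cost(gb_stored: float, transactions: int,
                 rate_per_gb: float, rate_per_10k_tx: float) -> float:
    """Estimate a monthly bill from capacity plus transaction charges.

    All rates are caller-supplied assumptions; failed requests (such as
    404s) should be counted in `transactions` too, since they are billed.
    """
    capacity_charge = gb_stored * rate_per_gb
    transaction_charge = (transactions / 10_000) * rate_per_10k_tx
    return capacity_charge + transaction_charge


if __name__ == "__main__":
    # Placeholder rates for illustration only.
    RATE_PER_GB = 0.15
    RATE_PER_10K_TX = 0.01
    # 1 GB of data hit 10 million times in a month.
    total = storage_cost(1, 10_000_000, RATE_PER_GB, RATE_PER_10K_TX)
    print(f"estimated bill: {total:.2f}")
```

With these placeholder rates the transaction charge dwarfs the capacity charge, which is the pattern the list item warns about.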

We hope this list helps you navigate Azure deployments. No matter what cloud you use, understanding Azure’s architecture will help you write better cloud scale applications.

We’d love to hear your suggestions and recommendations!

Mirrored on both blogs: Rob Hirschfeld’s Blog & Dave McCrory’s Blog