The last few weeks for my team at Dell have been all about testing as Crowbar goes through our QA cycle and enters field testing. These activities are the run-up to Dell open sourcing the bits.

The Crowbar testing cycle drove two significant architectural changes that are interesting as general challenges and important in the details for Crowbar adopters.

Challenge #1: Configuration Sequence

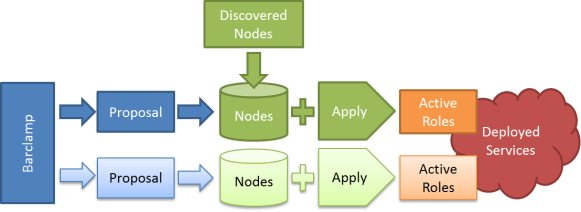

Crowbar has control of every step of deployment from discovery, BIOS/RAID configuration, base image, core services and applications. That’s a great value prop but there’s a chicken and egg problem: how do you set the RAID for a system when you have not decided which applications you are going to install on it?

The urgency of solving this problem became obvious during our first full integration tests. Nova and Swift need very different hardware configurations. In our first Crowbar flows, we would configure the hardware before you selected the purpose of the node. This was an effect of “rushing” into a Chef client ready state.

We also needed a concept of collecting enough nodes to deploy a solution. Building an OpenStack cloud requires that you have enough capacity to build the components of the system in the correct sequence.

Our solution was to inject a “pause” state just after node discovery. In the current Crowbar state machine, nodes pause after discovery. This allows you to assign them into the roles that you want them to play in your system.
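To make the idea concrete, here is a minimal sketch of a node lifecycle with a pause after discovery. It is an illustration only, not Crowbar's code: the state names, the `Node` class, and the `advance` method are all hypothetical.

```python
from enum import Enum


class NodeState(Enum):
    # Hypothetical state names, chosen for illustration only.
    DISCOVERED = "discovered"
    PAUSED = "paused"                  # waits here until a role is assigned
    HARDWARE_CONFIG = "hardware_config"
    READY = "ready"


class Node:
    def __init__(self, name):
        self.name = name
        self.state = NodeState.DISCOVERED
        self.role = None               # assigned by the operator while paused

    def advance(self):
        """Move one step forward; hold at PAUSED until a role is set."""
        if self.state is NodeState.DISCOVERED:
            self.state = NodeState.PAUSED
        elif self.state is NodeState.PAUSED:
            if self.role is None:
                return self.state      # user action required, stay paused
            self.state = NodeState.HARDWARE_CONFIG
        elif self.state is NodeState.HARDWARE_CONFIG:
            self.state = NodeState.READY
        return self.state


node = Node("node-1")
node.advance()          # discovered -> paused
node.advance()          # no role yet, so the node stays paused
node.role = "swift"     # the operator decides what the node is for
node.advance()          # paused -> hardware_config, now role-aware
```

The point of the sketch is the ordering: hardware configuration cannot start until the role is known, which is exactly the chicken and egg problem the pause is meant to break.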

In testing, we’ve found that the pause state helps manage the system deployment; however, it also added a new user action requirement.

Challenge #2: Multi-Master Updates

In Chef, the owner of a node’s data in the centralized database is the node, not the server. This is a logical (but not typical) design pattern and has interesting side effects. Specifically, updates from Chef Client runs on the nodes are considered authoritative and will overwrite changes made on the server.

This is correct behavior because Chef’s primary focus is updating the node (edge) and not the central system (core). If the authority were reversed, we would miss critical changes that Chef effected on the nodes. From this perspective, the server is a collection point for data that is owned and maintained at the nodes.

Unfortunately, Crowbar’s original design was to inject configuration into the Chef server’s node objects. We found that Crowbar’s changes could be silently lost since the server is not the owner of the data. This is not a locking issue – it is a data ownership issue. Crowbar was not talking to the master of the data when it made updates!
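The lost update is easy to reproduce in a toy model. The sketch below is not the Chef API: `ToyChefServer`, its methods, and the attribute names and values are made up for illustration. It only assumes what is described above, namely that the node saves its whole object at the end of a client run.

```python
import copy


class ToyChefServer:
    """Hypothetical stand-in for a server that stores whole node objects."""

    def __init__(self):
        self.nodes = {}

    def save_node(self, name, data):
        # Whole-object replace: whoever saves last wins.
        self.nodes[name] = copy.deepcopy(data)

    def load_node(self, name):
        return copy.deepcopy(self.nodes[name])


server = ToyChefServer()
server.save_node("node-1", {"raid": "unset", "last_run": 1})

# A client run starts: the node works from its own copy of the data.
client_copy = server.load_node("node-1")

# Meanwhile, a server-side tool edits the node object directly.
edited = server.load_node("node-1")
edited["raid"] = "raid10"
server.save_node("node-1", edited)

# The client run finishes and the node saves its copy as authoritative.
client_copy["last_run"] = 2
server.save_node("node-1", client_copy)

lost = server.load_node("node-1")["raid"]   # "unset": the server edit is gone
```

No lock would have helped here. Both writers behaved correctly by their own rules; the server-side edit simply went to a copy that the node was entitled to replace.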

To correct this problem, we (really Greg Althaus in a coding blitz) changed Crowbar to store data in a special role mapped to each node. This works because roles are mastered on the server. Crowbar can make reliable updates to the node’s dedicated role without worrying the remote data will override changes.

This pattern is a better separation of concerns because Crowbar and barclamp configuration is stored in a very clearly delineated location (a role named crowbar-[node]) and is not mixed with edge configuration data.
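A rough sketch of the per-node role pattern follows. Only the crowbar-[node] naming comes from the design described here; `ToyServer`, its methods, and the merge rule are hypothetical, and Chef's real attribute precedence is more detailed than a single dictionary update.

```python
def crowbar_role_name(node_name):
    # Role naming from the post: one dedicated role per node.
    return "crowbar-" + node_name


class ToyServer:
    """Hypothetical model: node data is edge-owned, roles are server-owned."""

    def __init__(self):
        self.nodes = {}   # replaced wholesale by client runs
        self.roles = {}   # written only by the server-side tool

    def save_node(self, name, data):
        # A client run overwrites the node object, but never touches roles.
        self.nodes[name] = dict(data)

    def set_role_attr(self, node_name, key, value):
        role = self.roles.setdefault(crowbar_role_name(node_name), {})
        role[key] = value

    def effective_config(self, node_name):
        # Toy merge: role settings are layered on top of node data.
        merged = dict(self.nodes.get(node_name, {}))
        merged.update(self.roles.get(crowbar_role_name(node_name), {}))
        return merged


server = ToyServer()
server.save_node("node-1", {"last_run": 1})
server.set_role_attr("node-1", "raid", "raid10")   # server-side change
server.save_node("node-1", {"last_run": 2})        # later client run

config = server.effective_config("node-1")
# config == {"last_run": 2, "raid": "raid10"}: the server-side change survives
```

Because each side writes only to the object it masters, a client run that lands in the middle of a server-side change no longer erases it.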

It turns out that these two design changes are tightly coupled. Simultaneous edge/server writes became very common after we added the pause state. They are infrequent for single node changes; however, the frequency increases when you are changing a system of interconnected nodes through multiple states.

More simply put: Crowbar is busy changing the node configs at exactly the same time the nodes are busy changing their own configuration.

Whew! I hope that helped clarify some interesting design considerations behind Crowbar’s design.

Note: I want to repeat that Crowbar is not tied to Dell hardware! We have modules that are specifically for our BIOS/RAID, but Crowbar will happily do all the other great deployment work if those barclamps are missing.

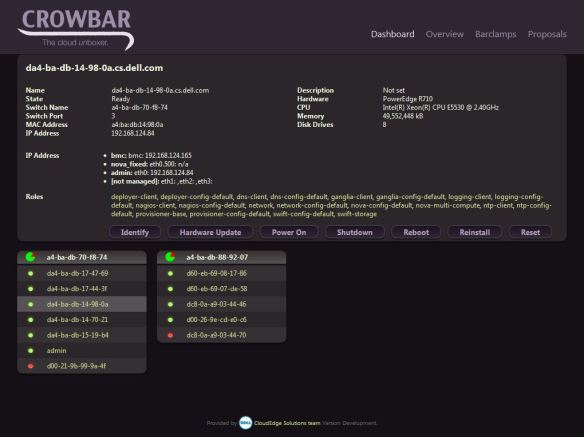

These Crowbar videos are the first two in a series on how to set up and use your own local Crowbar dev environment (here’s more info & the ISO). I used VMware Workstation, but any virtual host that supports Ubuntu 10.10 will work fine. We use ESX, KVM and Xen for testing too.

Original Post
