There’s a surprising amount of hair pulling regarding IaaS vs PaaS. People in the industry get into shouting matches about this topic as if it mattered more than Lindsay Lohan’s journey through rehab.

The cold hard reality is that while pundits are busy writing XaaS white papers, developers are off just writing software. We are writing software that fits within cloud environments (weak SLA, small VMs), saves money (hosted data instead of data in VMs), and changes quickly (interpreted languages). We’re doing it with an expanding toolkit of networked components like databases, object stores, shared caches, message queues, etc.

Using network components in an application architecture is about as novel as building houses made of bricks. So, what makes cloud architectures any better or different?

Nothing! There is no difference if you buy VMs, install services, and wire together your application in its own little cloud bubble. If I wanted to bait trolls, I’d call that an IaaS deployment.

However, there’s an emerging economic driver to leverage lower-cost and more elastic infrastructure by using services provided by hosts rather than standing them up in a VM. These services replace dedicated infrastructure with managed, network-attached services, and they have become a key differentiator for all the cloud vendors:

- At Google App Engine, they include Bigtable, Queues, Memcache, etc.

- At Microsoft Azure, they include SQL Azure, Azure Storage, AppFabric, etc.

- At Amazon AWS, they include S3, SimpleDB, RDS (MySQL), Queue & Notify, etc.

Using these services allows developers to focus on the business problems we are solving instead of building out infrastructure to run our applications. We also save money because consuming an elastic managed network service is less expensive (and more consumption-based) than standing up dedicated VMs to operate the same services ourselves.

Ultimately, an application can be written as stateless code (really, “externalized state” is a more accurate description) that relies on these services for persistence. If a host were to dynamically instantiate instances of that code based on incoming requests, then my application’s resource requirements would become strictly consumption-based. I would describe that as true cloud architecture.
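To make “externalized state” a little more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: `KeyValueStore`, `InMemoryStore`, `handle_visit`, and `new_instance` are hypothetical names, and the in-memory store is only a local stand-in for whatever managed, network-attached service a host might provide. The point is simply that the handler remembers nothing between calls, so any freshly started instance can serve any request.

```python
from typing import Dict, Protocol


class KeyValueStore(Protocol):
    """Hypothetical interface for a managed, network-attached store."""

    def get(self, key: str, default: int = 0) -> int: ...

    def put(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Local stand-in for a hosted service (an assumption for this sketch).

    A real deployment would reach the store over the network instead.
    """

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str, default: int = 0) -> int:
        return self._data.get(key, default)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


def handle_visit(store: KeyValueStore, user: str) -> str:
    """Stateless handler: everything it remembers lives in the store.

    Note: this read-then-write is not atomic. A real service would need
    an atomic increment or a transaction to be safe under concurrency.
    """
    count = store.get(user) + 1
    store.put(user, count)
    return f"{user} has visited {count} time(s)"


def new_instance(store: KeyValueStore):
    """Simulate the host starting a fresh copy of the code for a request."""
    return lambda user: handle_visit(store, user)


if __name__ == "__main__":
    shared = InMemoryStore()
    first = new_instance(shared)   # one instance handles the first request
    second = new_instance(shared)  # a different instance handles the next
    print(first("alice"))
    print(second("alice"))
```

Because the two instances share nothing except the store, the host is free to create or discard them per request, which is what would make the resource model consumption-based.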

On a bold day, I would even consider an environment that offered (or enforced) that architecture to be a platform. Some may even dare to describe that as a PaaS; however, I think it’s a mistake to look to the service offering for the definition when it’s driven by the application designers’ decisions to use network services.

While we argue about PaaS vs IaaS, developers are just doing what they need. Today they may stand up their own services, and tomorrow they may incorporate third-party managed services. The choice is not a binary switch, a layer cake, or a holy war.

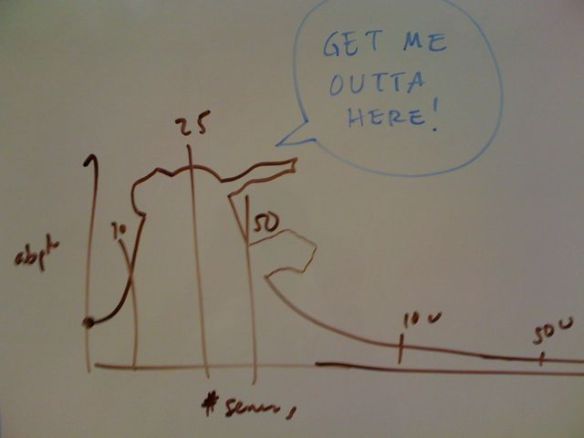

The choice is about picking the right resource model: the one that is most cost-effective and scalable.

I’m growing more and more concerned about the preponderance of Frankencloud offerings that I see being foisted into the market place (no, my employer,
