I’ve been watching a pattern emerge on the semiannual OpenStack release cycles for a while now. There is a hidden but crucial development phase that accelerates projects faster than many observers realize. In fact, I believe that substantial work is happening outside of the “normal” design cycle during what I call “free fall” development.

Understanding when the cool, innovative stuff happens is essential to getting (and giving) the most from OpenStack.

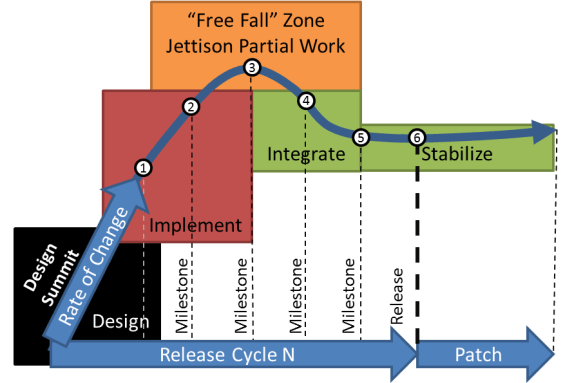

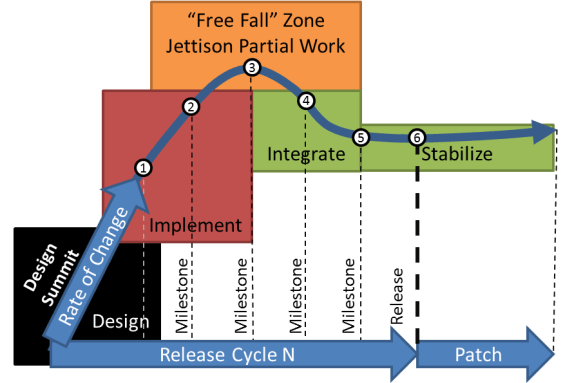

The published release cycle looms like a 6 stage ballistic trajectory. Launching at the design summit, the release features change and progress the most in the first 3 milestones. At the apogee of the release, maximum velocity is reached just as we start having to decide which features are complete enough to include in the release. Since many are not ready, we have to jettison (really, defer) partial work to ensure that we can land the release on schedule.

I think of the period where we lose potential features as free fall because thing can go in any direction. The release literally reverses course: instead of expanding, it is contracting. This process is very healthy for OpenStack. It favors code stability and “long” hardening times. For operators, this means that the code stops changing early enough that we have more time to test and operationalize the release.

But what happens to the jettisoned work? In free fall, objects in motion stay in motion. The code does not just disappear! It continues on its original upward trajectory.

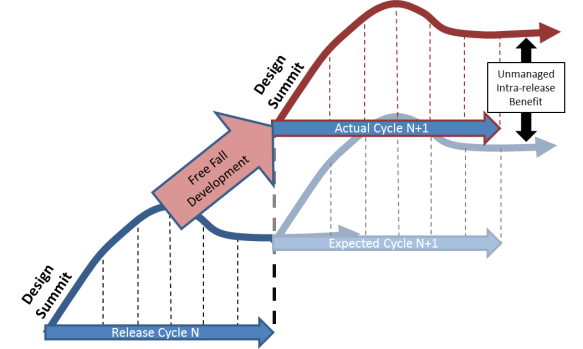

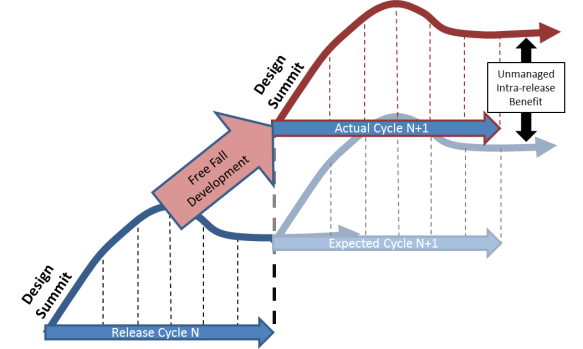

The developers who invested time in the code do not simply take a 3 month sabbatical, nor do they stop their work and start testing the code that was kept. No, after the short in/out sorting pause, the free fall work continues onward with rockets blasting. The challenge is that it is now getting outside of the orbit of the release plan and beyond the radar of many people who are tracking the release.

The consequence of this ongoing development is that developers (and the features they are working on) show up at the summit with 3 extra months of work completed. It also means that OpenStack starts each release cycle with a bucket of operationally ready code. Wow, that’s a huge advantage for the project in terms of delivered work, feature velocity and innovation. Even better, it means that the design summit can focus on practical discussions of real prototypes and functional features.

Unfortunately, this free fall work has hidden costs:

- It is relatively hidden because it is outside of the normal release cycle.

- It makes true design discussions less productive because the implemented code is more likely to make the next release cycle

- Integration for the work is postponed because it continues before branching

- Teams that are busy hardening a core feature can be left out of work on the next iteration of the same feature

- Forking can make it hard to capture bugs caught during hardening

I think OpenStack greatly benefits from free fall development; consequently, I think we need to acknowledge and embrace it to reduce its costs. A more explicit mid-release design synchronization when or before we fork may help make this hidden work more transparent.

I am humbled by the community

I am humbled by the community  If registered, you have 8 votes to allocate as you wish. You will get a link via email – you must use that link.

If registered, you have 8 votes to allocate as you wish. You will get a link via email – you must use that link.

I could not be happier with the results Crowbar collaborators and

I could not be happier with the results Crowbar collaborators and