In my work queue at Dell, the request for a “cloud taxonomy” keeps turning up on my priority list just behind world ~~dominance~~ peace. Basically, a cloud taxonomy is a layer cake picture that shows all the possible cloud components stacked together like gears in an antique Swiss watch. Unfortunately, our clock-like layer cake has evolved into a collaboration between the Swedish Chef and Rube Goldberg as we try to accommodate more and more technologies into the mix.

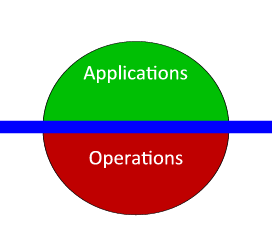

The key to de-spaghettifying our cloud taxonomy was to realize that clouds have two distinct sides: an external well-known API and internal “black box” operations. Each side has different objectives that together create an elastic, scalable cloud.

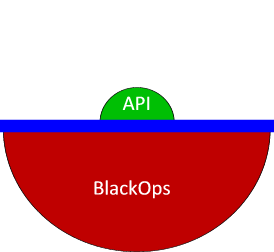

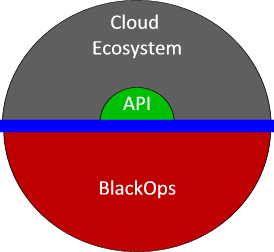

The objective of the API side is to provide the smallest usable “surface area” for cloud consumers. Surface area describes the scope of the interface that is published to the users. The smaller the area, the easier it is for users to comprehend and the harder it is for them to break. Amazon’s EC2 & S3 APIs set the standards for small surface area design and spawned a huge cloud ecosystem.

To understand the cloud taxonomy, it is essential to digest the impact of the cloud ecosystem. The cloud ecosystem exists primarily beyond the API of the cloud. It provides users with flexible options that address their specific use cases. Since the ecosystem provides the user experience on top of the APIs (e.g., RightScale), it frees the cloud provider to focus on services and economies of scale inside the black box.

The objective of the internal side of clouds is to create a perfect black box that gives API users the illusion of a perfectly performing, strictly partitioned, and totally elastic resource pool. To the consumer, it should not matter how ugly, inefficient, or inelegant the cloud operations really are; except, of course, that it does matter a great deal to the cloud operator.

Cloud operation cannot succeed at scale without mastering the discipline of operating the black box cloud (BlackOps).

The BlackOps challenge is that clouds cannot wait until all of the answers are known, because the issues with (and solutions to) scale architecture are difficult to predict in advance. Even worse, taking the time to solve them in advance likely means that you will miss the market.

Since new technologies and approaches are constantly emerging, there is no “design pattern” for hyperscale. To cope with constant changes, BlackOps live by seven tenets that help manage their infrastructure efficiently in a dynamic environment.

- Operational ownership – don’t wait for all the king’s horses and consultants to put you back together again (but asking for help is OK).

- Simple APIs – reduce the ways that consumers can stress the system, making the scale challenges more predictable.

- Efficiency-based financial incentives – customers will dramatically modify their consumption if you offer rewards that better match your black box’s capabilities.

- Automated processes & verification – ensures that changes and fixes can propagate at scale while errors are self-correcting.

- Frequent incremental rolling adjustments – prevents the great from being the enemy of the good so that systems are constantly improving (learn more about “split testing”).

- Passion for operational simplicity – at hyperscale, technical debt compounds very quickly. Debt translates into increased risk and reduced agility and can infect hardware, software, and process aspects of operations.

- Hunger for feedback & root-cause knowledge – if you’re building the airplane in flight, it’s worth taking extra time to check your work. You must catch problems early before they infect your scale infrastructure. The only thing more frustrating than fixing a problem at scale is fixing the same problem multiple times.
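To make two of these tenets concrete (automated verification and frequent incremental rolling adjustments), here is a minimal Python sketch of a rolling update that changes a few nodes at a time, verifies each batch, and rolls the batch back if verification fails. It is an illustration under stated assumptions, not how Crowbar or any particular cloud does it: `Node`, `rolling_update`, `demo_apply`, and `demo_verify` are hypothetical names, and the "n4 cannot run v2" failure is invented for the demo.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Node:
    """Hypothetical stand-in for one machine inside the black box."""
    name: str
    version: str
    healthy: bool = True


def rolling_update(
    nodes: List[Node],
    new_version: str,
    apply: Callable[[Node, str], None],
    verify: Callable[[Node], bool],
    batch_size: int = 2,
) -> Tuple[List[str], bool]:
    """Update nodes batch by batch.

    Returns the names of nodes that were updated and verified, plus a flag
    that is False if a batch failed verification. A failing batch is rolled
    back to its previous versions and the rollout stops there.
    """
    updated: List[str] = []
    for start in range(0, len(nodes), batch_size):
        batch = nodes[start:start + batch_size]
        previous = {n.name: n.version for n in batch}
        for n in batch:
            apply(n, new_version)
        if not all(verify(n) for n in batch):
            # Self-correct: restore the whole batch, then halt the rollout.
            for n in batch:
                apply(n, previous[n.name])
            return updated, False
        updated.extend(n.name for n in batch)
    return updated, True


def demo_apply(node: Node, version: str) -> None:
    """Assumed deploy step: node "n4" cannot run "v2" (invented failure)."""
    node.version = version
    node.healthy = not (node.name == "n4" and version == "v2")


def demo_verify(node: Node) -> bool:
    """Assumed health check: trust the node's own health flag."""
    return node.healthy


if __name__ == "__main__":
    fleet = [Node(f"n{i}", "v1") for i in range(1, 6)]
    done, ok = rolling_update(fleet, "v2", demo_apply, demo_verify)
    print(done, ok, [n.version for n in fleet])
```

The small batch size is the point: a bad change touches only a couple of nodes before the check catches it, and the failure leaves behind a specific batch to investigate for root cause.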

It’s no surprise that these are exactly the Agile & Lean principles. The pace of change in cloud is so fast and fluid that BlackOps must use an operational model that embraces iterative and rolling deployment.

Compared to highly orchestrated traditional IT operations, this approach seems like sending a team of ninjas to battle on quicksand with objectives delivered in a fortune cookie.

I am not advocating fuzzy mysticism or by-the-seat-of-your-pants do-or-die strategies. BlackOps is a highly disciplined process based on well-understood principles from just-in-time (JIT) and lean manufacturing. Best of all, it is fast to market, able to deliver high quality, and capable of responding to change.

Post Script / Plug: My understanding of BlackOps is based on the operational model that Dell has introduced around our OpenStack Crowbar project. I’m going to be presenting more about this specific topic at the OpenStack Design Conference next week.