Joining in the blackout; however, I’ll defer to more articulate bloggers on this topic.

CloudOps white paper explains “cloud is always ready, never finished”

I don’t usually call out my credentials, but knowing that I have a Masters in Industrial Engineering helps (partially) explain my passion for process as being essential to successful software delivery. One of my favorite authors, Mary Poppendieck, explains undeployed code as perishable inventory that you need to get to market before it loses value. The big lessons (low inventory, high quality, system perspective) from Lean manufacturing translate directly into software and, lately, into operations as DevOps.

What we have observed from delivering our own cloud products, and working with customers on theirs, is that the operations process for deployment is as important as the software and hardware. It is simply not acceptable for us to market clouds without a compelling model for maintaining the solution into the future. Clouds are simply moving too fast to be delivered without a continuous delivery story.

This white paper [link here!] has been available since the OpenStack conference, but not linked to the rest of our OpenStack or Crowbar content.

Austin OpenStack Meetup (January Minutes) + OpenStack Foundation Web Cast!

Sorry for the brevity… At the last Austin OpenStack meetup, we had >60 stackers! Some from as far away as Portland and Boston (as in Oregon and Massachusetts).

Notes:

- Suse introduced their OpenStack beta and talked about their Suse Studio that can deploy images against the OpenStack APIs

- I showed off DevStack.org code that can set up the trunk of OpenStack (now Essex) in about 10 minutes on a single node. Great for developers!

- I showed an OpenStack Diablo Final deployment from Crowbar. I focused mainly on Dashboard and used our reference architecture (see below) as illustration of the many parts.

- Matt Ray suggested everyone watch the webcasts about the OpenStack Foundation (Thurs 6pm central & Friday 9am central)

- We planned the next few meetups.

- For February, we’ll talk about Swift and Dashboard.

- For March, we’ll talk about Essex and DevStack to prep for the next design summit (in SF).

- For April, we’ll debrief the conference

Thank you Suse and Dell (my employer) for sponsoring! The next meetup is sponsored by Canonical.

Early crop of Crowbar 1.3 features popping up

My team at Dell is still figuring out some big items for the 1.3 release; however, some things were just added that are worth calling out.

- Ubuntu 11.04 support! Thanks to Justin Shepherd from Rackspace Cloud Builders!

- Alias names for nodes in the UI

- User managed node groups in the UI

- Ability to pre-populate the alias, description and group for a node (not integrated with DNS yet)

- Hadoop is working again – we addressed the missing Ganglia repo issue. Thanks to Victor Lowther.

Also, I’ve spun new open source ISOs with the new features. User beware!

January OpenStack Meetup next Tuesday 1/10 focus on Operation/Install

A reminder that we’re having an OpenStack meetup in Austin next Tuesday (http://www.meetup.com/OpenStack-Austin/events/44184682/).

We’ll have OpenStack follow-up and general topics, including planning our next meeting.

The primary topic for this meeting is Operating OpenStack. According to the group poll, the plan is to show a hands-on OpenStack installation and peel back the covers on configuration.

I’m expecting to use Dell’s Crowbar tool to set up the Rackspace Cloud Builders distro. I’m hoping that someone can also show DevStack and some other installations.

It’s not too late to vote: http://www.meetup.com/OpenStack-Austin/polls/444322/

Thanks to Suse and Dell for sponsoring!

Note: If you’re in the Boston area, the next meetup there is 2/1

Details: http://www.foggysoftware.com/2011/12/openstack-in-community.html

February 1, 2012 at 6:30PM EST

Boston OpenStack User Group Meetup

Harvard University, Maxwell Dworkin Building, Rm 119, 33 Oxford St, Cambridge, MA

To register: http://www.meetup.com/Openstack-Boston/

2012: A year of Cloud Coalescence (whatever that means)

This post is a collaboration between three Dell Cloud activists: Rob Hirschfeld (@zehicle), Joseph B George (@jbgeorge) and Stephen Spector (@SpectoratDell).

We’re not making predictions for the “whole” Cloud market; this is a relatively narrow perspective based on technologies that are on our daily radar. These views are strictly our own and based on publicly available data. They do not reflect plans, commitments, or internal data from our employer (Dell).

The major 2012 theme is cloud coalescence. However, Rob worries that we’ll see slower adoption due to lack of engineers and confusing names/concepts.

Here are our twelve items for 2012:

- Open source continues to be a disruptive technology delivery model. It’s not “free” software – there’s an emerging IT culture that is doing business differently, including a number of large enterprises. The stable of sleeping giant vendors is waking up to this in 2012 but full engagement will take time.

- Linux. It is the cloud operating system and had a great 2012. It seems silly pointing this out since it seems obvious, but it’s the foundation for open source acceleration.

- Tight market for engineering and product development talent will get tighter. The catch-22 of this is that potential mentors are busy breaking new ground and writing code, making it hard for new experts to be developed.

- On track, OpenStack moves into its awkward adolescence. It is still gangly and rebelling against authority, but coming into its own. Expect to see a groundswell of installations and an expected wave of issues and challenges that will drive the community. By the “F” release, expect to see OpenStack cement itself as a serious, stable contender with notable public deployments and a significant international private deployment footprint.

- We’ll start seeing OpenStack Quantum (networking) in near-production pilots by year end. OpenStack Quantum is the glue that holds the big players in OpenStack Nova together. The potential for next generation cloud networking based on open standards is huge, but it will not emerge without a killer app (OpenStack Nova in this case) pushing it forward. The OpenStack community will pull together to keep Quantum on track.

- Hadoop will cross into mainstream awareness as the need for big data analysis grows exponentially along with the data. Hadoop is on fire in select circles and completely obscure in others. The challenge for Hadoop is there are not enough engineers who know how to operate it. We suspect that lack of expertise will throttle demand until we get more proprietary tools to simplify analysis. We also predict a lot of very rich entrepreneurs and VCs emerging from this market segment.

- DevOps will enter mainstream IT discussions. Marketers from major IT brands will struggle and fail to find a better name for the movement. Our prediction is that by 2015, it will just be the way that “IT” is done and the name won’t matter.

- KVM continues to gain believers as the open source hypervisor. In 2011, I would not have believed this prediction, but KVM is making great strides and getting a lot of love from the OpenStack community, though Xen is also a key open source technology. I believe that Libvirt compatibility between LXC & KVM will further accelerate both virtualization approaches.

- Big Data and NoSQL will continue to converge. While NoSQL enthusiasm as a universal replacement for structured databases appears to be deflating, real applications will win.

- Java will continue to encounter turbulence as a software platform under Oracle’s overly heavy-handed management.

- PaaS continues to be a confusing term. Cloud players will struggle with a definition but I don’t think a common definition will surface in 2012. I think the big news will be convergence between DevOps and PaaS; however, that will be under the radar since most of the market is still getting educated on both of those concepts.

- Hybrid cloud will continue to make strides but will not truly emerge in 2012 – we’ll try to develop this technology, and expose gaps that will get us there ultimately (see PaaS and Quantum above)

Thoughts? We’d love to hear your comments.

Rob, JBG, and Stephen

You can follow Rob at www.RobHirschfeld.com or @zehicle on Twitter.

You can follow Joseph at www.JBGeorge.net or @jbgeorge on Twitter.

You can follow Stephen at http://en.community.dell.com/members/dell_2d00_stephen-sp/blogs/default.aspx or @SpectoratDell on Twitter.

Crowbar 1.2 released includes OpenStack Diablo Final

With the holiday rush, I neglected to post about Monday’s Crowbar v1.2 release (ISO here)!

The core focus for this release was to support the OpenStack Diablo Final bits (which my employer, Dell, includes as part of the “Dell OpenStack Powered Cloud Solution”); however, we added a lot of other capability as we continue to iterate on Crowbar.

I’m proud of our team’s efforts on this release on both features and quality. I’m equally delighted about the Crowbar community engagement via the Crowbar list server. Crowbar is not hardware or operating system specific, so it’s encouraging to hear about deployments on other gear and see the community helping us port to new operating system versions.

We are driving more and more content to Crowbar’s Github as we work to improve community visibility for Crowbar. As such, I’ve been regularly updating the Crowbar Roadmap. I’m also trying to make videos for Crowbar training (suggestions welcome!). Please check back for updates about upcoming plans and sprint activity.

Crowbar Added Features in v1.2:

- Central feature was OpenStack Diablo Final barclamps (tag “openstack-os-build”)

- Improved barclamp packaging

- Added concepts for “meta” barclamps that are suites of other barclamps

- Proposal queue and ordering

- New UI states for nodes & barclamps (led spinner!)

- Install includes self-testing

- Service monitoring (bluepill)

Looking forward

Dell has a long list of pending Hadoop and OpenStack deployments using these bits so you can expect to see updates and patches matching our field experiences. We are very sensitive to community input and want to make Crowbar the best way to deliver a sustainable repeatable reference deployment of OpenStack, Hadoop and other cloud technologies.

OpenStack Deployments Abound at Austin Meetup (12/9)

I was very impressed by the quality of discussion at the Deployment topic meeting for Austin OpenStack Meetup (#OSATX). Of the 45ish people attending, we had representatives from at least 6 different OpenStack deployments (Dell, HP, ATT, Rackspace Internal, Rackspace Cloud Builders, Opscode Chef)! Considering the scope of those deployments (several are aiming at 1000+ nodes), that’s a truly impressive accomplishment for such a young project.

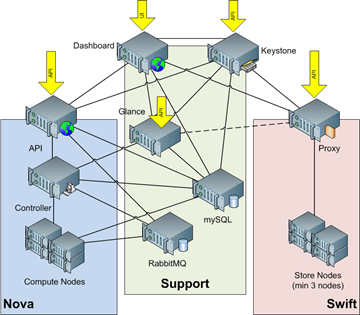

Even with the depth of the discussion (notes below), we did not go into details on how individual OpenStack components are connected together. The image my team at Dell uses is included below. I also recommend reviewing Rackspace’s published reference architecture.

Figure 1 Diablo Software Architecture. Source Dell/OpenStack (cc w/ attribution)

Notes

Our deployment discussion was a round table so it is difficult to link statements back to individuals, but I was able to track companies (mostly).

- HP

- picked Ubuntu & KVM because they were the most vetted. They are also using Chef for deployment.

- running Diablo 2, moving to Diablo Final & a flat network model. The network controller is a bottleneck. Their biggest scale issue is RabbitMQ.

- is creating their own Nova Volume plugin for their block storage.

- At this point, scale limits are due to simultaneous loading rather than total number of nodes.

- The Nova node image cache can get corrupted without any notification or way to force a refresh – this defect is being addressed in Essex.

- has set up availability zones that are completely independent (500-node) systems. Expecting to converge them in the future.

- Rackspace

- is using the latest Ubuntu. Always stays current.

- using Puppet to setup their cloud.

- They are expecting to go live on Essex and are keeping their deployment on the Essex trunk. This is causing some extra work but they expect it to pay back by allowing them to get to production on Essex faster.

- Deploying on XenServer

- “Devs move fast, Ops not so much.” Trying to not get behind.

- Rackspace Cloud Builders (RCB) is running major releases through an automated test suite. The verified releases are being published to https://github.com/cloudbuilders (note: Crowbar is pulling our OpenStack bits from this repo).

- Dell

- commented that our customers are using Crowbar primarily for pilots – they are learning how to use OpenStack

- Said they have >10 customer deployments pending

- ATT is using the open source version of Crowbar

- Keystone and Dashboard were considered essential additions to Diablo

- Hypervisors

- KVM is considered the top one for now

- Libvirt (which uses KVM) also supports LXC, which people found to be interesting

- XenServer via XAPI is also popular

- Not so much activity on ESX & Hyper-V

- We talked about why some hypervisors are more popular – it’s about the node agent architecture of OpenStack.

- Storage

- NetApp via Nova Volume appears to be a popular block storage option

- Keystone / Dashboard

- Customers want both together

- Including keystone/dashboard was considered essential in Diablo. It was part of the reason why Diablo Final was delayed.

- HP is not using dashboard

- Members of the audience commented that we need to deprecate the EC2 APIs (because it does not help OpenStack long term to maintain EC2 APIs over its own). [1/5 Note: THIS IS NOT OFFICIAL POLICY, it is a reflection of what was discussed]

- HP started on EC2 API but is moving to the OpenStack API

Meetup Housekeeping

- Next meeting is Tuesday 1/10 and sponsored by SUSE (note: Tuesday is just for this January). Topic TBD.

- We’ve got sponsors for the next SIX meetups! Thanks to Dell (my employer), Rackspace, HP, SUSE, Canonical and PuppetLabs for sponsoring.

- We discussed topics for the next meetings (see the post image). We’re going to throw it to a vote for guidance.

- The OSATX tag is also being used by Occupy San Antonio. Enjoy the cross chatter!

Extending Chef’s reach: “Managed Nodes” for External Entities.

Note: this post is very technical and relates to detailed Chef design patterns used by Crowbar. I apologize in advance for the post’s opacity. Just unleash your inner DevOps geek and read on. I promise you’ll find some gems.

At the Opscode Community Summit, Dell’s primary focus was creating an “External Entity” or “Managed Node” model. Matt Ray prefers the term “managed node” so I’ll defer to that name for now. This model is needed for Crowbar to manage system components that cannot run an agent, such as a network switch, blade chassis, IP power distribution unit (PDU), or SAN array. The concept for a managed node is that there is an instance of the chef-client agent that can act as a delegate for the external entity. We’ve been reluctant to call it a “proxy” because that term is so overloaded.

My Crowbar vision is to manage an end-to-end cloud application life-cycle. This starts from power and network connections to hardware RAID and BIOS then up to the services that are installed on the node and ultimately reaches up to applications installed in VMs on those nodes.

Our design goal is that you can control a managed node with the same Chef semantics that we already use. For example, adding a Network proposal role to the Switch managed node will force the agent to update its configuration during the next chef-client run. During the run, the managed node will see that the network proposal has several VLANs configured in its attributes. The node will then update the actual switch entity to match the attributes.

Design Considerations

There are five key aspects of our managed node design. They are configuration, discovery, location, relationships, and sequence. Let’s explore each in detail.

A managed node’s configuration is different than a service or actuator pattern. The core concept of a node in Chef is that the node owns the configuration. You make changes to the node’s configuration and it’s the node’s job to manage its state to maintain that configuration. In a service pattern, the consumer manages specific requests directly. At the summit (with apologies to Bill Clinton), I described Chef configuration as telling a node what it “is” while a service provides verbs that change a node. The critical difference is that a node is expected to maintain configuration as its composition changes (e.g.: node is now connected for VLAN 666) while a service responds to specific change requests (node adds tag for VLAN 666). Our goal is to maintain Chef’s configuration management concept for the external entities.
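To make the “is” versus “verbs” distinction concrete, here is a minimal Python sketch of the convergence idea, using the VLAN example from above. It is not Chef or Crowbar code: `FakeSwitch` and `converge_vlans` are hypothetical names invented for illustration, and a real delegate would talk to an actual device instead of an in-memory set.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FakeSwitch:
    """Hypothetical stand-in for the external entity (a real switch)."""
    vlans: set[int] = field(default_factory=set)


def converge_vlans(desired: set[int], switch: FakeSwitch) -> list[str]:
    """Make the switch match the desired state and report what changed."""
    actions = []
    # Add VLANs that the attributes declare but the switch lacks.
    for vlan in sorted(desired - switch.vlans):
        switch.vlans.add(vlan)
        actions.append(f"add {vlan}")
    # Remove VLANs that the switch has but the attributes no longer declare.
    for vlan in sorted(switch.vlans - desired):
        switch.vlans.discard(vlan)
        actions.append(f"remove {vlan}")
    return actions


switch = FakeSwitch(vlans={100})
first_run = converge_vlans({100, 666}, switch)   # the switch gains VLAN 666
second_run = converge_vlans({100, 666}, switch)  # nothing left to change
```

The point of the sketch is that the caller only states what the switch “is” (its set of VLANs); running the same convergence twice is harmless, which is the property a verb-style service call does not give you for free.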

Managed nodes also have a resource discovery concept that must align with the current ohai discovery model. Like a regular node, the managed node’s data attributes reflect the state of the managed entity; consequently we’d expect a blade chassis managed node to enumerate the blades that are included. This creates an expectation that the managed node appears to be “root” for the entity that it represents. We are also assuming that the Chef server can be trusted with the sharable discovered data. There may be cases where these assumptions do not have to be true, but we are making them for now.
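As a rough illustration of discovery, the sketch below turns a raw chassis inventory into nested node attributes. The input format, the attribute layout, and the `discover_chassis` name are all assumptions made up for this example; they are not ohai’s actual schema or any chassis vendor’s API.

```python
def discover_chassis(raw_inventory: list) -> dict:
    """Build ohai-style attributes describing a chassis and its blades.

    raw_inventory is a hypothetical list of dicts, one per blade, as a
    delegate might collect them from the chassis management port.
    """
    blades = {
        str(item["slot"]): {
            "serial": item["serial"],
            "powered_on": item["power"] == "on",
        }
        for item in raw_inventory
    }
    return {"chassis": {"blade_count": len(blades), "blades": blades}}


attributes = discover_chassis([
    {"slot": 1, "serial": "ABC123", "power": "on"},
    {"slot": 2, "serial": "DEF456", "power": "off"},
])
```

The managed node would publish something like `attributes` to the Chef server, so other nodes could look up which blades a chassis contains.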

Another essential element of managed nodes is that their agent location matters because the external resource generally has restricted access. There are several examples of this requirement. Switch configuration may require a serial connection from a specific node. Blade, SAN, and PDU management ports are restricted to specific networks. This means that the managed node agents must run from a specific location. This location is not important to the Chef server or the nodes’ actions against the managed node; however, it’s critical for the system when starting the managed node agent. While it’s possible for managed nodes to run on nodes that are outside the overall Chef infrastructure, our use cases make it more likely that they will run as independent processes from regular nodes. This means that we’ll have to add some relationship information for managed nodes and perhaps a barclamp to install and manage managed nodes.

All of our use cases for managed nodes have a direct physical linkage between the managed node and server nodes. For a switch, it’s the ports connected. For a chassis, it’s the blades installed. For a SAN, it’s the LUNs exposed. These links imply a hierarchical graph that is not currently modeled in Chef data – in fact, it’s completely missing and difficult to maintain. At this time, it’s not clear how we or Opscode will address this. My current expectation is that we’ll use yet more roles to capture the relationships and add some hierarchical UI elements into Crowbar to help visualize it. We’ll also need to comprehend node types because “managed nodes” are too generic in our UI context.
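One way to picture the missing relationship data is as a list of typed links indexed in both directions. This is only a sketch of the idea, not how Crowbar or Chef stores it (the post says we expect to use roles); the node names, link types, and `build_index` function are hypothetical.

```python
from collections import defaultdict

# (managed node, link type, server node) -- all names are made up.
LINKS = [
    ("switch-1", "port", "node-a"),
    ("switch-1", "port", "node-b"),
    ("chassis-1", "blade", "node-a"),
    ("san-1", "lun", "node-b"),
]


def build_index(links):
    """Index the links by managed node and by server node."""
    by_managed = defaultdict(list)
    by_server = defaultdict(list)
    for managed, kind, server in links:
        by_managed[managed].append((kind, server))
        by_server[server].append((kind, managed))
    return dict(by_managed), dict(by_server)


by_managed, by_server = build_index(LINKS)
```

With the index in hand, `by_managed["switch-1"]` answers “what is plugged into this switch?” and `by_server["node-a"]` answers “which managed nodes does this server depend on?”, which are the two views a hierarchical UI would need.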

Finally, we have to consider the sequence of actions between managed nodes and nodes. In all of our use cases, bringing up a node requires orchestration with the managed node. Specifically, there needs to be a hand-off between the managed node and the node. For example, installing an application that uses VLANs does not work until the switch has created the VLAN. There are the same challenges between LUNs and SANs and between blades and chassis. Crowbar provides orchestration that we can leverage, assuming we can declare the linkages.
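Once the linkages are declared, the hand-off order can be derived from them. The sketch below uses Python’s standard `graphlib` module (Python 3.9+) to order a few hypothetical steps; it illustrates dependency ordering in general and is not Crowbar’s orchestration code.

```python
from graphlib import TopologicalSorter

# step -> steps that must finish first (hypothetical step names).
DEPENDS_ON = {
    "node-a: install app": {"switch-1: create VLAN 666", "san-1: expose LUN"},
    "switch-1: create VLAN 666": set(),
    "san-1: expose LUN": set(),
}


def run_order(depends_on):
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(depends_on).static_order())


order = run_order(DEPENDS_ON)
```

In `order`, both managed node steps come before the application install; the relative order of the two managed node steps is not significant because neither depends on the other.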

For now, a hack to get started…

For now, we’ve started on a workable hack for managed nodes. This involves running multiple chef-clients on the admin server in their own paths & processes. We’ll also have to add yet more roles to comprehend the relationships between the managed nodes and the things that are connected to them. Watch the crowbar listserv for details!
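As a sketch of what “their own paths & processes” could look like, the snippet below builds one chef-client command line per managed node, each pointing at its own configuration file through chef-client’s `-c` (config file) option. The directory layout, node names, and `client_command` helper are assumptions for illustration, not the layout Crowbar uses, and the commands are only constructed here, not executed.

```python
from pathlib import PurePosixPath

# Hypothetical base directory holding one subdirectory per managed node.
BASE = PurePosixPath("/opt/managed-nodes")


def client_command(name: str) -> list:
    """Build the chef-client invocation for one managed node."""
    config = BASE / name / "client.rb"
    return ["chef-client", "-c", str(config)]


commands = [client_command(name) for name in ("switch-1", "pdu-1")]
```

Each command could then be launched as a separate process on the admin server, so every managed node keeps its own identity, keys, and cache directory.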

Extra Credit

Notes on the Opscode wiki from the Crowbar & Managed Node sessions

OpenStack Quantum Update – what I got wrong and where it’s headed

I’m glad to acknowledge that I incorrectly reported the OpenStack Quantum project would require licensed components for implementation! I fully stand behind Quantum as being OpenStack’s “killer app” and am happy to post more information about it here.

Side note: My team at Dell is starting to get Crowbar community pings about collaboration on a Quantum barclamp. Yes, we are interested!

This update comes via Dan Wendlandt from Nicira, who pointed out my error in the Seattle Meetup notes (you can read his comment on that post). Rather than summarize his information, I’ll let Dan speak for himself…

Dan’s comments about open source Quantum implementation:

There’s full documentation on how to use Open vSwitch to implement Quantum (see http://docs.openstack.org/incubation/openstack-network/admin/content/ and http://openvswitch.org/openstack/documentation/), and [Dan] even sent a demo link out to the openstack list a while back (http://wiki.openstack.org/QuantumOVSDemo). Open vSwitch is completely open source and free. Some other plugins may require proprietary hardware and/or software, but there is definitely a (very) viable and completely open source option for Quantum networking.

Dan’s comments about Quantum OpenStack project status in the D-E-F release train:

At the end of the Diablo cycle, Quantum applied to become an incubated project, which means it will be incubated for Essex. At the end of the Essex cycle, we plan to apply to be a core project, meaning that if we are accepted, we would be a core project for the F-series release.

It’s worth noting, however, that [Dan] knows of many people planning on putting Quantum in production before then, which is the real indicator of a project’s maturity (regardless of whether it is technically “core” or not).